quote:There has to be a joke in there somewhere about building a wall around Austin.

LCRA lists the drainage area of Lake Travis as 38,130 square miles. If the whole drainage area caught a 10 inch rain, that'd be about 6.3 trillion gallons of runoff.

Using stupid math (my patent is pending!), a 10 inch rain over the whole drainage area for Lake Travis would be enough to cover all of the city limits of Austin in 117 feet of water.
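For anyone who wants to redo the stupid math, here is a minimal sketch of it. The gallons-per-cubic-foot constant is the standard US conversion, and the 271.6 square mile city area is a hypothetical value picked only because it reproduces the 117 ft figure; neither number comes from the thread. With these constants the volume lands a bit over 6.6 trillion gallons, the same ballpark as the 6.3 trillion quoted.

```python
# Rough volume of a uniform rain over a drainage area, and how deep that
# water would stand if it were all piled onto a smaller area.
SQFT_PER_ACRE = 43_560
ACRES_PER_SQ_MILE = 640
GALLONS_PER_CUBIC_FOOT = 7.48052  # US gallons; standard conversion, not from the thread


def rain_volume_gallons(area_sq_miles, rain_inches):
    """Gallons of water from a uniform rain, counting 100% of it as runoff."""
    area_sqft = area_sq_miles * ACRES_PER_SQ_MILE * SQFT_PER_ACRE
    return area_sqft * (rain_inches / 12.0) * GALLONS_PER_CUBIC_FOOT


def stacked_depth_feet(source_sq_miles, rain_inches, target_sq_miles):
    """Depth in feet if all rain from the source area sat on the target area."""
    return source_sq_miles * (rain_inches / 12.0) / target_sq_miles


gallons = rain_volume_gallons(38_130, 10)
# Hypothetical city area (assumption), chosen to reproduce the 117 ft figure.
depth = stacked_depth_feet(38_130, 10, 271.6)
print(f"{gallons / 1e12:.2f} trillion gallons, {depth:.0f} ft deep")
```

Both functions assume every drop runs off and arrives, which is the whole reason it's stupid math.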

Lake Travis water level

24,053 Views |

144 Replies |

Last: 8 yr ago by Yuccadoo

I tried to do that calculation already, but couldn't find a stage-storage curve for the lake. If you find one, will you link it here?

quote:not an optimal way to do this, but you can at least get a feel for the numbers.

I tried to do that calculation already, but couldn't find a stage-storage curve for the lake. If you find one, will you link it here?

http://hydromet.lcra.org/lakevolume/

It does Travis and Buchanan combined but if you put 912 for Buchanan's level it calculates it with Buchanan empty.

quote:Agronomy nerd says an acre is 43,560 sq ft. and an acre inch of water is 27,154 gallons. So an inch over 40,000 sq ft is just under 25,000 gallons (24,935) So your estimations are high by about 20%.

For fun let's say on 40,000 sq ft of land (approx 1 acre) one inch of rainfall would result in 30,000 gallons of water.
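The acre-inch numbers above are easy to check. A quick sketch, assuming the standard 7.48052 US gallons per cubic foot (that constant is not from the thread):

```python
SQFT_PER_ACRE = 43_560
GALLONS_PER_CUBIC_FOOT = 7.48052  # standard US conversion (assumption)


def gallons_from_rain(area_sqft, rain_inches=1.0):
    """Gallons of water from a given depth of rain over a flat area."""
    return area_sqft * (rain_inches / 12.0) * GALLONS_PER_CUBIC_FOOT


acre_inch = gallons_from_rain(SQFT_PER_ACRE)   # one inch over one acre
lot = gallons_from_rain(40_000)                # one inch over 40,000 sq ft
overestimate = 30_000 / lot - 1.0              # how far off the 30,000 guess is

print(round(acre_inch), round(lot), f"{overestimate:.0%}")
```

This agrees with the 27,154 and 24,935 gallon figures and the "high by about 20%" remark.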

Just for fun, say you have a one section ranch (640 acres) and a cloud exactly the size of your ranch forms overhead and rains itself out, dumping one inch of rain.

How much did the cloud weigh?

144,937,190 pounds. Kind of mind-blowing, isn't it? Now figure this weekend's projected rains of up to 5 inches over the entire Panhandle.....
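The cloud weight can be reproduced from the acre-inch figure quoted earlier in the thread. This sketch assumes water weighs about 8.34 pounds per gallon, a common rule-of-thumb value that the post does not state:

```python
GALLONS_PER_ACRE_INCH = 27_154   # figure quoted in the thread
POUNDS_PER_GALLON = 8.34         # assumed weight of water, not stated in the post


def rain_weight_pounds(acres, rain_inches):
    """Weight of the water that falls as a uniform rain over an area."""
    return acres * rain_inches * GALLONS_PER_ACRE_INCH * POUNDS_PER_GALLON


section = rain_weight_pounds(640, 1)  # one section, one inch
print(f"{section:,.0f} pounds")
```

Scaling to a bigger storm is just a matter of changing the acres and inches passed in.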

This has gone from an Outdoor discussion, to a Political discussion, now to a Nerd discussion. WTF is going on!!!!

Hey, anyone have a link to the new lakes being built. I've always wondered how that would work nowadays.

quote:Yea, forestry guy here and I know an acre is 43,560 sq feet, but our roof was only 4,000 sq ft; now if it had been 4,356 sq feet I could have been more exact with my math

quote:Agronomy nerd says an acre is 43,560 sq ft. and an acre inch of water is 27,154 gallons. So an inch over 40,000 sq ft is just under 25,000 gallons (24,935) So your estimations are high by about 20%.

For fun let's say on 40,000 sq ft of land (approx 1 acre) one inch of rainfall would result in 30,000 gallons of water.

quote:They have these little things called "calculators"

our roof was only 4,000 sq ft; now if it had been 4,356 sq feet I could have been more exact with my math

quote:You can look up Lane City Reservoir and find some information.

This has gone from an Outdoor discussion, to a Political discussion, now to a Nerd discussion. WTF is going on!!!!

Hey, anyone have a link to the new lakes being built. I've always wondered how that would work nowadays.

As far as to how it works nowadays - figure 3+ years of regulations, permits and redoing permits. Plus another year once you actually get started of more permits.

And that is just for the work associated with the Colorado River, Jarvis Creek and an unnamed tributary of Jarvis Creek that all fall within the Waters of the US jurisdiction.

I know nothing about permits, but we had LCRA's Natalie Stoll speak to our Rotary group about them recently. One was an old quarry where the other will be similar to a giant retention pond, neither will be open to the public. Since they will be pumping water into and out of both, they didn't have some of the hurdles that damming a tributary or the main river would produce.

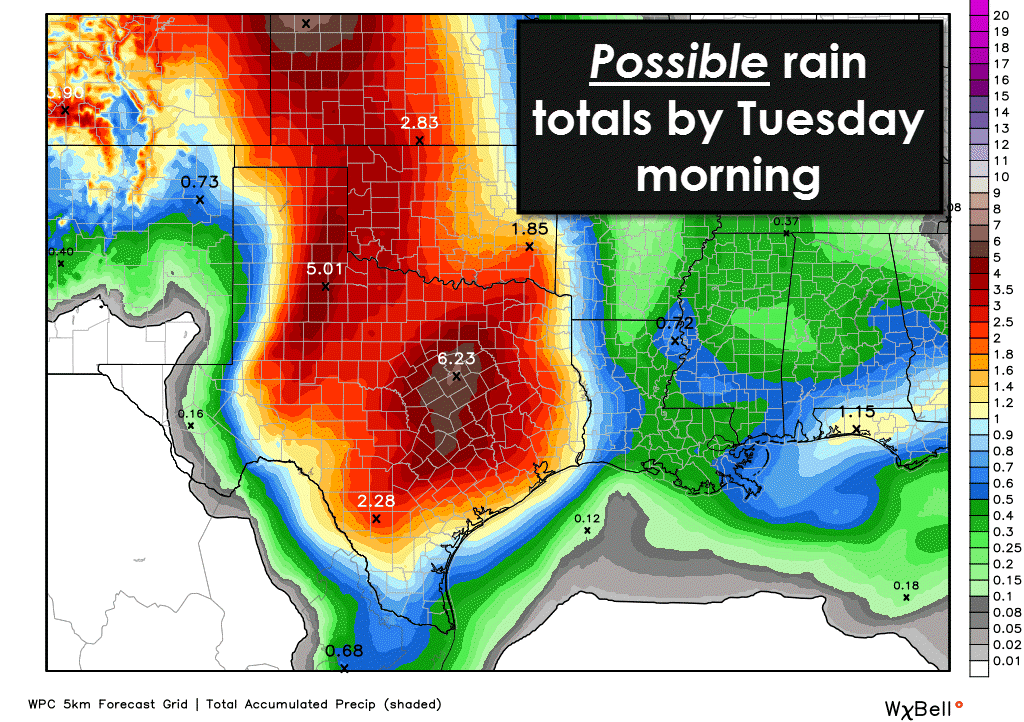

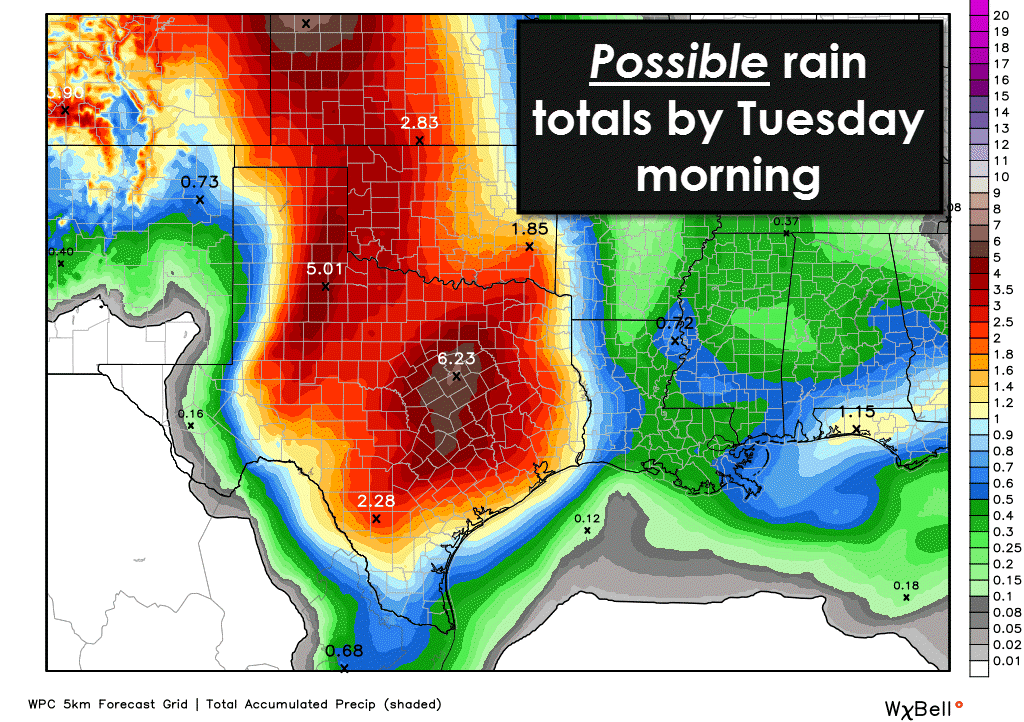

Regardless of if this fits in the politics, nerd or other category...

We have a ton of saturated ground out there that may look dry on the surface, but will not absorb much rain...

And...

We have rain coming....

Please be ready for anything in the coming days....

TEEX

quote:

LCRA lists the drainage area of Lake Travis as 38,130 square miles. If the whole drainage area caught a 10 inch rain, that'd be about 6.3 trillion gallons of runoff.

Using stupid math (my patent is pending!), a 10 inch rain over the whole drainage area for Lake Travis would be enough to cover all of the city limits of Austin in 117 feet of water.

That's assuming 100% runoff directly into the lake, right? Some water will go into people's rain collection system, some will go into pools, a lot will drain into the ground (although I guess the aquifer and lake level are related), some will evaporate.

I guess what I'm asking is, given that drainage area, what % actually drains into Travis or into an underground aquifer that impacts Travis levels? I feel like it's something much less than 100% but as I think about it, maybe it is actually closer to 100%?

quote:Any answer would be a SWAG, but keep in mind runoff is largely a function of rate of rainfall, not simply the total amount that falls. It has to be a very complex "formula" that would take a computer program(s) to estimate, and they would still be debatable. I'd venture to guess that significantly less than 50% of any rain event reaches Travis.

I guess what I'm asking is, given that drainage area, what % actually drains into Travis or into an underground aquifer that impacts Travis levels? I feel like it's something much less than 100% but as I think about it, maybe it is actually closer to 100%?

Okay, enough silly math. Here's how it really breaks down.

From the perspective of a large drainage area (10+ square miles), the first 0.10 to 0.25 inches of rainfall produce no runoff because it fills natural depressions, pools, puddles, etc. The exception would be if there's been enough precipitation in the previous ~week to already fill those tiny reservoirs. The next 2 inches or so produce between 0.5 inches and 2 inches of runoff, depending on land use and soil types. Sands and pastures produce less runoff, while clays, bedrock, and urban areas produce more. After about 4 inches of rain has fallen, it pretty much all becomes runoff.
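One textbook way to put numbers on this kind of behavior is the SCS (NRCS) curve number method. To be clear, that is a swapped-in standard technique, not necessarily what this post or the agencies' models use, and the curve numbers below are illustrative assumptions rather than values for the Travis watershed. A minimal sketch:

```python
def scs_runoff_inches(rain_inches, curve_number):
    """Runoff depth (inches) from the SCS curve number equation.

    curve_number runs from just above 0 to 100; higher means more runoff
    (clays, rock, pavement), lower means less (sands, pasture).
    """
    if not 0 < curve_number <= 100:
        raise ValueError("curve_number must be in (0, 100]")
    retention = 1000.0 / curve_number - 10.0  # potential maximum retention S
    initial_abstraction = 0.2 * retention     # rain absorbed before any runoff
    if rain_inches <= initial_abstraction:
        return 0.0
    excess = rain_inches - initial_abstraction
    return excess ** 2 / (rain_inches + 0.8 * retention)


# Illustrative curve numbers only (assumptions): 60 for sandy pasture, 90 for clay or urban.
for rain in (0.25, 2.0, 4.0, 10.0):
    print(rain, round(scs_runoff_inches(rain, 60), 2), round(scs_runoff_inches(rain, 90), 2))
```

The shape matches the description: nothing runs off at first, and the runoff fraction climbs toward 100% as the storm total grows.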

The fancy hydraulic models that the guys over at LCRA, USACE, etc. use generally end up with about 10 inches of runoff for the 100-year event (which is 11.5 inches of total rain). Trouble is, over what portion of the watershed? Seeing as how the Lake Travis watershed is 38,130 square miles in size, that's way, way larger than even epic-level storms like TS Allison or TS Bill. You'll never get 10+ inches over the entire watershed; instead, some areas get 25 inches while others get 0.25 inches. The 1955 flood only averaged out to 0.45 inches of rain over the whole watershed. Talking about 10" across the whole watershed is silly and stupid because it has never happened and likely never will.

The dam operators use the flood pool to "absorb" the flood wave rushing down the watershed and then slowly release it over a few weeks or months instead of the few hours in which mother nature dumped it. The flood pool for Travis (assuming this is accurate) is 787,000 acre-ft, which is about 0.40 inches of runoff. The spillway starts running at elev ~714, so any flow after 714 will not be the initial rush of the storm but a small tail-end of the storm that trickles in days or weeks later.
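The conversion from flood pool volume to inches of runoff is a one-liner. A small sketch using the 787,000 acre-ft and 38,130 square mile figures from the posts (the function name is just illustrative):

```python
ACRES_PER_SQ_MILE = 640


def acre_feet_to_watershed_inches(acre_feet, watershed_sq_miles):
    """Depth of runoff (inches) that a storage volume represents when
    spread evenly over the whole watershed."""
    watershed_acres = watershed_sq_miles * ACRES_PER_SQ_MILE
    return acre_feet / watershed_acres * 12.0


flood_pool_inches = acre_feet_to_watershed_inches(787_000, 38_130)
print(f"{flood_pool_inches:.2f} inches of runoff")
```

That comes out just under 0.4 inches, in line with the "about 0.40 inches" above.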

quote:what an aptly used username.

Regardless of if this fits in the politics, nerd or other category...

We have a ton of saturated ground out there that may look dry on the surface, but will not absorb much rain...

And...

We have rain coming....

Please be ready for anything in the coming days....

TEEX

quote:

I tried to do that calculation already, but couldn't find a stage-storage curve for the lake. If you find one, will you link it here?

I just gave it a shot using CharlieBrown's link. It looks like the same storm that caused a 57 foot rise in Lake Travis in 1952 would have only caused a 30 foot rise had the lake started at full conservation pool. That would be 3 feet below the spillway and 39 feet below the top of the dam, not nearly as alarming as Coyote made it sound. This also assumes the absolute worst case scenario: flooding downstream is so bad that the LCRA can't open any flood gates during the rise in lake level.

quote:The primary reason for flash flooding in the Hill Country of Texas (ergo Lake Travis watershed) is the steep terrain. Not asphalt and concrete. As you mentioned, those externalities are mitigated by the mandated holding ponds that accompany them. But even without them (ponds), compared to the many millions of native, undeveloped acres of land, they (? acres of asphalt and concrete) make up only a tiny fraction of 1 % of the total surface area. It simply doesn't contribute as much to the total amount of flash flood waters; just total flood water.

Yes, the area has always had flooding issues because of the rock base, the elevation changes and the resulting gullies and canyons, the type of heavy rapid rainfall events that can occur in the region due to the local climate and intense frontal activity and long lines of supercells that can form, and you get a lot of rapid aggregation and channelization of rainfall.

What is changing over time is that people, myself included, are very drawn to those canyons and streams with their shade trees and such, and you have boatloads of people living in them now, and building as if the water doesn't rise 20 or 30 feet in a few hours. To add to that, we in fact HAVE been covering thousands and thousands of acres of rolling farmland and fields with asphalt and concrete, and then channeling that flow into storm drains that very rapidly dump that water into creeks and rivers so most of us can continue going about our business in heavy rain, which makes flood control models and data obsolete every few years. In the last decade or so we have begun to attempt to mitigate this effect with attempts to capture and hold runoff in basins and small reservoirs that have outlets with limited flow rates in order to delay the surge of runoff into the natural creeks and rivers, and it has helped, but we have a lot of catching up to do, and at the rate we build new drainage and add new burden to existing systems we have trouble really planning and anticipating the effects of our development on drainage. Adding small reservoirs on major side creeks that only limit flow rate at the outlet is probably the best strategy to limit flooding on the main river, but you have to have undeveloped park space for them to fill up into in a flood event until they can slowly release down to regular flow rate.

To the point, the Blanco River flood of last year was not significantly impacted by the "thousands and thousands of acres of asphalt and concrete" claimed to exist across that watershed by posts on this thread (if they even exist in the first place).

I may be crazy or wrong and am trying to recall my hydrology classes, but if you get 10 inches of rain, it doesn't make a difference whether it is paved or not. The upper soil becomes fully saturated nearly instantly and the bulk of the rain ends up as runoff. Unpaved areas are not a "sponge" that just soaks up and holds water.

That, and water underground still flows downhill and into creeks, streams and rivers, albeit slower, but it still makes its way there. Nature doesn't like maintaining a hydraulic gradient beneath the surface any more than it does above the surface.

Depends on the soil type, but clay soils especially really slow the infiltration rate as they absorb water. But they still take it in, and if you have grass or other litter to slow runoff, it can take in a lot over time.

Asphalt and concrete take in zero from start to finish.

That's wrong. If it was true, you wouldn't see detention or retention ponds built to serve virtually all new developments. That's the only way to avoid raising the 100 year floodplain downstream.

I completely agree channelized uneven terrain is the primary issue with flooding. I am saying on the localized level in urban and suburban areas our development changes how the water aggregates and flows over time and the results make local flooding unpredictable. Don't forget to add buildings to your coverage calculations. In the urban and suburban local areas around Austin, 1% surface coverage is very likely not correct in many places. It probably is more like 10% in suburbs and urban areas.

We humans tend to channelize drainage further to speed runoff from the higher ground we prefer to build on. I am simply saying that this results in a faster aggregation of runoff as it doesn't have to flow laminarly over a natural earth and grass surface, which substantially re-tards the flow rate. (Between Apple autocorrect and texags filter, you can barely type the word re-tard, and my use of it here is entirely legitimate!). I would agree that with exceptional rain events, most of those factors matter much less.

I speak in regards to the channelization issue from experience. My parents' neighborhood once had pasture acreage next door. A new master-planned neighborhood was built on it with all the modern flood control design, and they built a huge flood control ditch between my parents' neighborhood and the new one down to the natural creek that bordered both. In the next major rain event, my parents' neighborhood had flooding on several streets that had never flooded before, not even during Tropical Storm Allison when they got 20 inches of rain in 3 days. The culprit was the flood control design of the new neighborhood. It was so efficient that it locally flooded the creek, causing it to back up into my parents' neighborhood's flood control ditches. They actually had to install a system of flow gates to meter the flow rate and prevent water from getting pushed back up.

That's all I am saying: we tend to increase the rate at which runoff reaches natural channels not naturally sized to deal with water arriving at those rates, and then we like to build in those areas as well.

I suspect we see Buchanan full this week and maybe Travis at 105%.

quote:Yessssssss! Blue star this man!!

Even MORE fun (bad) math: a 10 inch rain over the whole Lake Travis drainage area would be enough water to cover the t.u. campus (431 acres) in 53,800 feet of water. That'd make the hydrostatic water pressure on the buildings 23,300 PSI - which is more than enough to crush all of the buildings.
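A quick sketch of the pressure part, assuming fresh water at about 62.4 pounds per cubic foot (a standard value, not stated in the post). It also recomputes the depth from the 38,130 square mile and 431 acre figures; with those inputs the depth comes out nearer 47,000 feet than 53,800, so the post may have started from a slightly different area. The pressure figure does follow from the quoted depth.

```python
ACRES_PER_SQ_MILE = 640
WATER_LB_PER_CUBIC_FOOT = 62.4   # assumed density of fresh water
SQ_INCHES_PER_SQ_FOOT = 144


def stacked_depth_feet(source_sq_miles, rain_inches, target_acres):
    """Depth in feet if all rain from the source area sat on the target area."""
    source_acres = source_sq_miles * ACRES_PER_SQ_MILE
    return source_acres * (rain_inches / 12.0) / target_acres


def hydrostatic_psi(depth_feet):
    """Gauge pressure at the bottom of a column of fresh water."""
    return depth_feet * WATER_LB_PER_CUBIC_FOOT / SQ_INCHES_PER_SQ_FOOT


depth = stacked_depth_feet(38_130, 10, 431)
print(f"depth from thread figures: {depth:,.0f} ft")
print(f"pressure at 53,800 ft: {hydrostatic_psi(53_800):,.0f} psi")
```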

Opened a gate into Ladybird Lake from Lake Austin at 4:40 PM today.

quote:

Opened a gate into Ladybird Lake from Lake Austin at 4:40 PM today.

How was the fishing there?

quote:That was on Facebook. I don't think the fishing would be great there in a release though, because Ladybird doesn't have much bank fishing really.

quote:

Opened a gate into Ladybird Lake from Lake Austin at 4:40 PM today.

How was the fishing there?

I know some guys are upset about the release because it'll wash some of the matted grass that grows in LBL out

Overland sheet flow is incredibly slow. Undisturbed pasture or grassland can take days to drain after even moderate rains. That's not to say there wouldn't be flooding after deluges like what is happening now, but an unpaved and un-channelized environment drains orders of magnitude slower than what man has built. Even in the Hill Country. Roadside ditches, gutters, culverts, and the like all drain much faster. Improved channels in urban areas, concrete-lined channels that prevent meandering, and everything else we've done all speed runoff and heighten the peak.

Now, weeks later when it's all over and the fields are dry, feel free to integrate the hydrographs of both a natural and an altered system. Yes, the area under both curves will be the same.
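To make the "same area under both curves" point concrete, here is a toy sketch with two triangular hydrographs. The peak flows and durations are made-up illustrative numbers, not data from any real gauge; the only point is that a short, tall hydrograph and a long, low one can carry identical volume.

```python
CUBIC_FEET_PER_ACRE_FOOT = 43_560


def triangular_hydrograph(peak_cfs, time_to_peak_hr, base_hr, step_hr=1.0):
    """Return (times, flows) for a simple triangular hydrograph."""
    times = [i * step_hr for i in range(int(base_hr / step_hr) + 1)]
    flows = []
    for t in times:
        if t <= time_to_peak_hr:
            q = peak_cfs * t / time_to_peak_hr                          # rising limb
        else:
            q = peak_cfs * (base_hr - t) / (base_hr - time_to_peak_hr)  # recession
        flows.append(max(q, 0.0))
    return times, flows


def volume_acre_feet(times_hr, flows_cfs):
    """Integrate flow over time with the trapezoid rule."""
    cubic_feet = 0.0
    for i in range(1, len(times_hr)):
        dt_seconds = (times_hr[i] - times_hr[i - 1]) * 3600.0
        cubic_feet += 0.5 * (flows_cfs[i] + flows_cfs[i - 1]) * dt_seconds
    return cubic_feet / CUBIC_FEET_PER_ACRE_FOOT


# Hypothetical numbers: slow natural drainage vs. fast channelized drainage.
natural = triangular_hydrograph(peak_cfs=1_000, time_to_peak_hr=80, base_hr=240)
altered = triangular_hydrograph(peak_cfs=5_000, time_to_peak_hr=16, base_hr=48)

print(round(volume_acre_feet(*natural)), round(volume_acre_feet(*altered)))
print(max(natural[1]), max(altered[1]))
```

Same volume either way; the altered system just delivers it five times faster with five times the peak, which is the part that floods things.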

Quick, open some more rice paddies. St. Augustine lawns for ALL!!!!!!!

The arguments on the thread about development vs. natural are two different arguments. One side is talking about localized flooding (like the example of the pasture turned development next to a neighborhood). In these cases of course the development increases the chance for adverse effects from runoff. However, even in those cases the engineer should have accounted for it and designed some mitigation.

The other argument is about watershed flooding, in which case I agree that the effect of development on the entire watershed is somewhat negligible. In many cases you can actually prove that development helps the aggregate runoff numbers of a watershed by getting the first "wave" passed through the watershed quicker before all the other aggregates get there.

quote:Travis at 102%, Buchanan at 93%

I suspect we see Buchanan full this week and maybe Travis at 105%.

I know you're a farmer/rancher so maybe that's why you're being precise, 'cause the cost/return is of utmost importance to you...

but you sound like an engineer!

Buchanan was the last remaining hold out not at 100% was it not?

quote:

Buchanan was the last remaining hold out not at 100% was it not?

In Texas? There are a lot still very low.

http://www.waterdatafortexas.org/reservoirs/statewide

Choke Canyon, JB Thomas, Meredith, Medina, plus a lot of smaller lakes.

Meant within the highland lakes chain and Colorado River drainage.

Highway 83 runs from Childress to Abilene to Laredo. It's pretty much the divide between the haves and the have nots. Most of West Texas is still very low, though quite a bit above two-three years ago.

J.B. Thomas is an interesting one. It went from essentially dry to almost full practically overnight, after an 18 inch rain on its watershed.

September 2014 thread about the quick rise of Thomas:

http://texags.com/forums/34/topics/2529388

quote:

Highway 83 runs from Childress to Abilene to Laredo. It's pretty much the divide between the haves and the have nots. Most of West Texas is still very low, though quite a bit above two-three years ago.

J.B. Thomas is an interesting one. It went from essentially dry to almost full practically overnight, after an 18 inch rain on its watershed.

September 2014 thread about the quick rise of Thomas:

http://texags.com/forums/34/topics/2529388

So the desert is dry and the sub tropics are wet like nature intended.
