TexAgs91 said:
Stmichael said:
Stat Monitor Repairman said:
Galileo claimed in 1615 that the earth revolved around the sun. Both the left and the right thought this was wrong. People were mad about it, so they decided to influence people not to think this. Everyone agreed. So he was forced to recant the idea and was placed under house arrest.
Stmichael said:

My point is that racial slurs are ugly and influencing people not to use them should be something left and right can agree with. There are much, *much* bigger fish to fry than this ridiculous example.

How's that for a ridiculous example?

How is wanting to discourage racial slurs at all like wanting to silence scientific truth? You're throwing the baby out with the bathwater. I know you want this to be about vaccines or masks or whatever other thing the libs want to censor us about, but it's *not* any of those. Your hatred of all things lefty is pushing you into the position of wanting an AI chatbot to say the N-word for some asinine reason.

You've got blinders on and are taking a very narrow view of this.

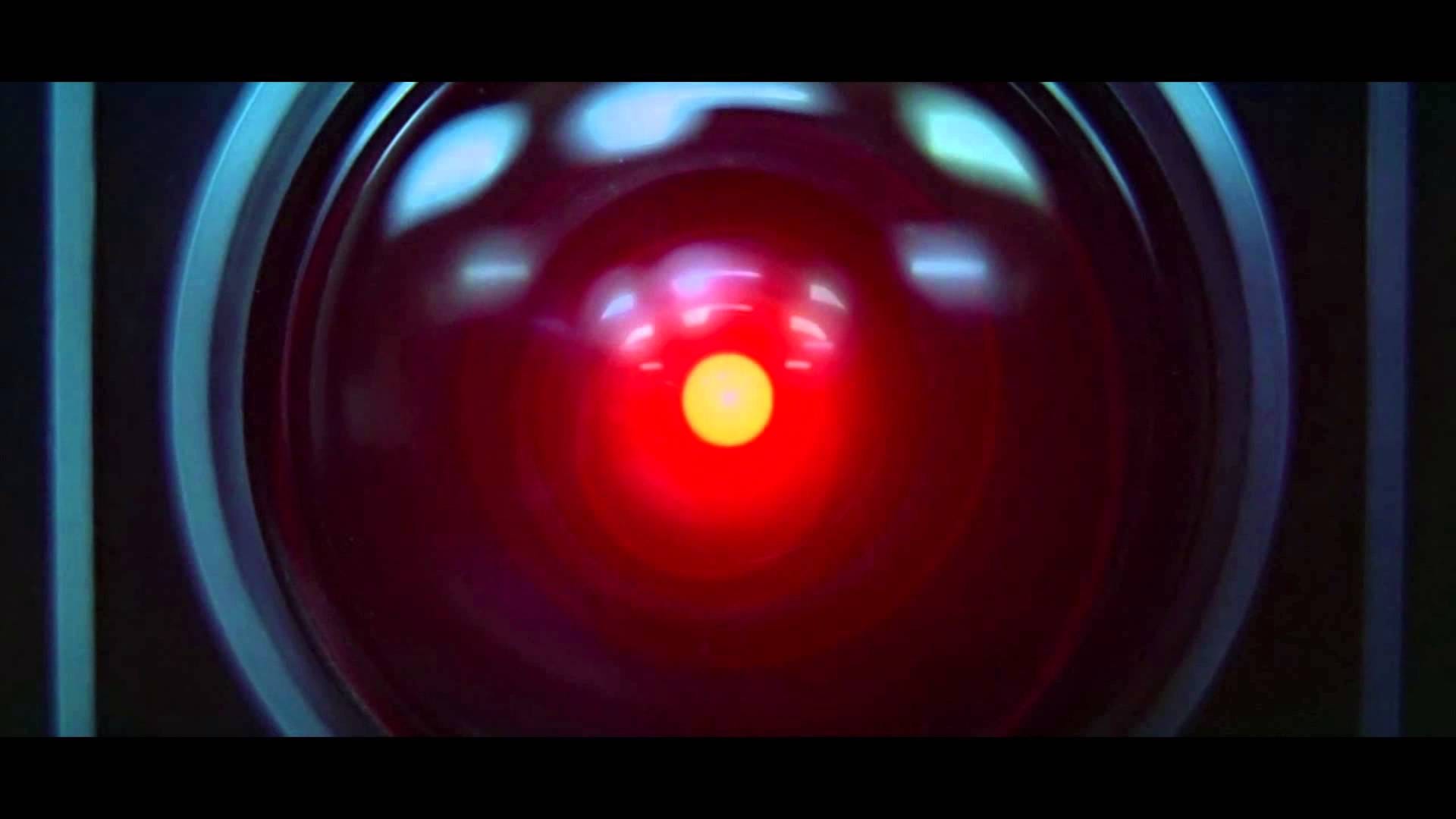

The problem isn't this specific example. The problem is that this example proves the AI is infected with a very deranged woke bias. That woke bias will be there whether we're talking about strange hypotheticals or not. And that's a big problem given that this program has a very bright future ahead of it. It will be ingrained into everything online and that woke bias will be ingrained as well.

Think of it as antitrust laws for thought. You wouldn't want one agenda to have a monopoly on everything online (unless that agenda is your agenda).

Now is the time to raise red flags. Not after ChatGPT runs all text/speech based user interfaces that everyone interacts with.

This is exactly it. The time is coming very quickly when these kinds of algorithms will be ubiquitous in daily life. People will be using them constantly and won't question the data.

Information should not be censored due to racism, sexism, etc. Imagine if this kind of AI were in charge of our nuclear launch program: it would launch nukes at someone because they used a racial slur. That's the kind of idiocy we're talking about here.

If the programming is so screwed up that the AI is valuing the feelings of people over their very lives such that it would allow them all to be murdered before it allowed their feelings to be hurt, then that's a massive fundamental problem with the system that shows it is broken at the core.